[This is one of the finalists in the 2025 review contest, written by an ACX reader who will remain anonymous until after voting is done. I’ll be posting about one of these a week for several months. When you’ve read them all, I’ll ask you to vote for a favorite, so remember which ones you liked]

My dad only actually enjoys about ten foods, nine of them beige. His bread? White. His pizza? Cheese. His meat? Turkey breast. And his side dish? Mashed potatoes.

As a child I hated mashed potatoes, despite his evangelization of them. I too was a picky eater growing up, but I would occasionally attempt to see what he saw in his beloved spuds. Whenever I tried a bite, the texture disgusted me: a gritty gruel of salty flakes coated with the oleic pall of margarine. The flavor reminded me of stale Pringles. I checked back once every couple years, but was repulsed by them every time.

I lobbied my parents for pasta or frozen tater tots or any other side I actually liked. Family dinners were often dichotomous, the same protein supplemented by two different carbs. “You are not my son,” my father would joke as he continued to put away his potato slop. “Maybe you’re not my father,” I’d shoot back when he shunned the rest of the family’s rice pilaf. Our starch preferences seemed irreconcilable.

As I entered my teen years, my palate expanded. After I’d tried and enjoyed brussels sprouts and sushi and escargot, my hatred of one of the most basic and inoffensive of all foods seemed silly. One day at a nice restaurant, I decided to give mashed potatoes one more try.

Upon taking my first bite, I realized three things:

1) Mashed potatoes are good.

2) Whatever my dad had been eating at home was not mashed potatoes.

3) My world is built on lies.

Mashed Potatoes are Good

Potatoes were domesticated several millennia ago at the dawn of agriculture in the rugged highlands near Lake Titicaca in modern-day Peru. Their origins lie in a wild family of tiny, bitter, pockmarked solanum roots, so full of glycoalkaloids that when foraged they had to be eaten alongside clay to soak up their toxins. From this paltry stock of nightshades, archaic peoples of the Andes gradually husbanded generous, nutritious, mild tubers that would remain the staple of the region’s foodways through several successive civilizations.

These roots resemble the ancestral stock of modern potatoes (source)

Andean peoples found all sorts of ways to prepare their potatoes. The most immediate method was to boil them into stews, soups, or mashes with local flavoring agents - herbs, salt, chilis. Earthenware ovens called huatias were used to bake them. With even more time, they could be fermented into tocosh, an edible paste with antibacterial properties.

To get the spuds to really last, though, they were subjected to a natural freeze-drying method that produced shrivelled potato pellets called chuño. Repeatedly frozen by bitter mountain nights, baked in the sun, and stomped on to remove water, chuño remains shelf stable for up to a decade and can be rehydrated into a spongy, earthy, slightly less nutritious potato-like object.

The ability to produce chuño on the Altiplano is thought to have contributed to the Incan empire’s military dominance of the region, since despite its generally unappealing gustatory properties it’s perfect for keeping troops fed on long marches. Chuño also allowed Incan civilization to stockpile surpluses against lean years and trade potatoes as commodities over great distances. It wasn’t the best way to eat a potato you harvested today, but it was the only way to turn a potato you have today into a potato you’ll have two years from now. That had immense value.

After the Spanish conquest and the Columbian exchange, the potato made gradual inroads into the Old World, where the previous best root vegetables were often comparatively less nutritious parsnips and turnips. There was an initial adjustment period: new cultivars capable of growing in shorter hours of daylight had to be developed, objections to the absence of tubers in the Bible needed to be quelled, and the French eventually had to concede that potatoes do not, as they at first believed, cause leprosy.

With these hurdles cleared, in the 19th century the potato spread out and became one of the easiest and most efficient ways to turn arable land into palatable calories the world over. National cuisines incorporated the new staple crop thoroughly, and it’s now hard to imagine Italian food without gnocchi, French sans vichyssoise, tapas without patatas bravas, a Eurasia bereft of aloo and rösti and colcannon and latkes.

Europe’s new potato lovers also took to the simple recipe of boiling ‘em and mashing ‘em. While South America had lacked the livestock for dairy, in Europe the potato mash soon achieved its ultimate form with the addition of milk and butter, which impart a smoother texture and richer taste. Hannah Glasse’s procedure published in 1747 in The Art of Cookery Made Plain and Easy is, minus the long s’s, still just about how I make them today:

Maſhed Potatoes.

BOIL your potatoes, peel them and put them into a ſauce-pan, maſh them well ; To two pounds of potatoes, put a pint of milk, a little ſalt, ſtir them well together, take care they don’t ſtick to the bottom, then take a quarter of a pound of butter, ſtir in and ſerve it up.

Nowhere was the potato embraced more thoroughly than in Ireland. In the early 19th century, extractive British demands on Irish agriculture to feed the armies fighting Napoleon reduced the available land for Irish farmers to feed themselves. Achieving maximum caloric density on the remaining land was paramount, and almost nothing is denser than the potato.

Potatoes quickly became an integral part of Irish life, so essential to the food systems of the island that when a blight hit them in the mid-1840s it led to one of the most devastating famines in history. The failure of the potato crops created starvation and emigration so profound in scale that the population of the island still has not recovered to its 1845 level almost two centuries later.

Among those millions of potato-starved emigres were my dad’s ancestors, who came to America in the decades following the famine. My great-grandfather, who bore the extremely Irish name Gerald FitzGerald, instilled in his children (including my grandmother) a reconstructed sense of Irish-American ethnic pride that included an affinity for corned beef and cabbage, Guinness beer, and the affordable practicality of mashed potatoes.

As the generations marched on, those mashed potatoes turned out to be one of the only things my grandmother would make that my exceedingly picky father would eat. Their creamy texture and subtle starchy taste didn’t trigger the “ew gross” reaction he had to so many other foods. Mashed potatoes, just like the ones Glasse had written about more than two centuries earlier, became his favorite side - and eventually, when I finally got to try them, one of mine too.

Whatever My Dad Had Been Eating at Home Was NOT Mashed Potatoes

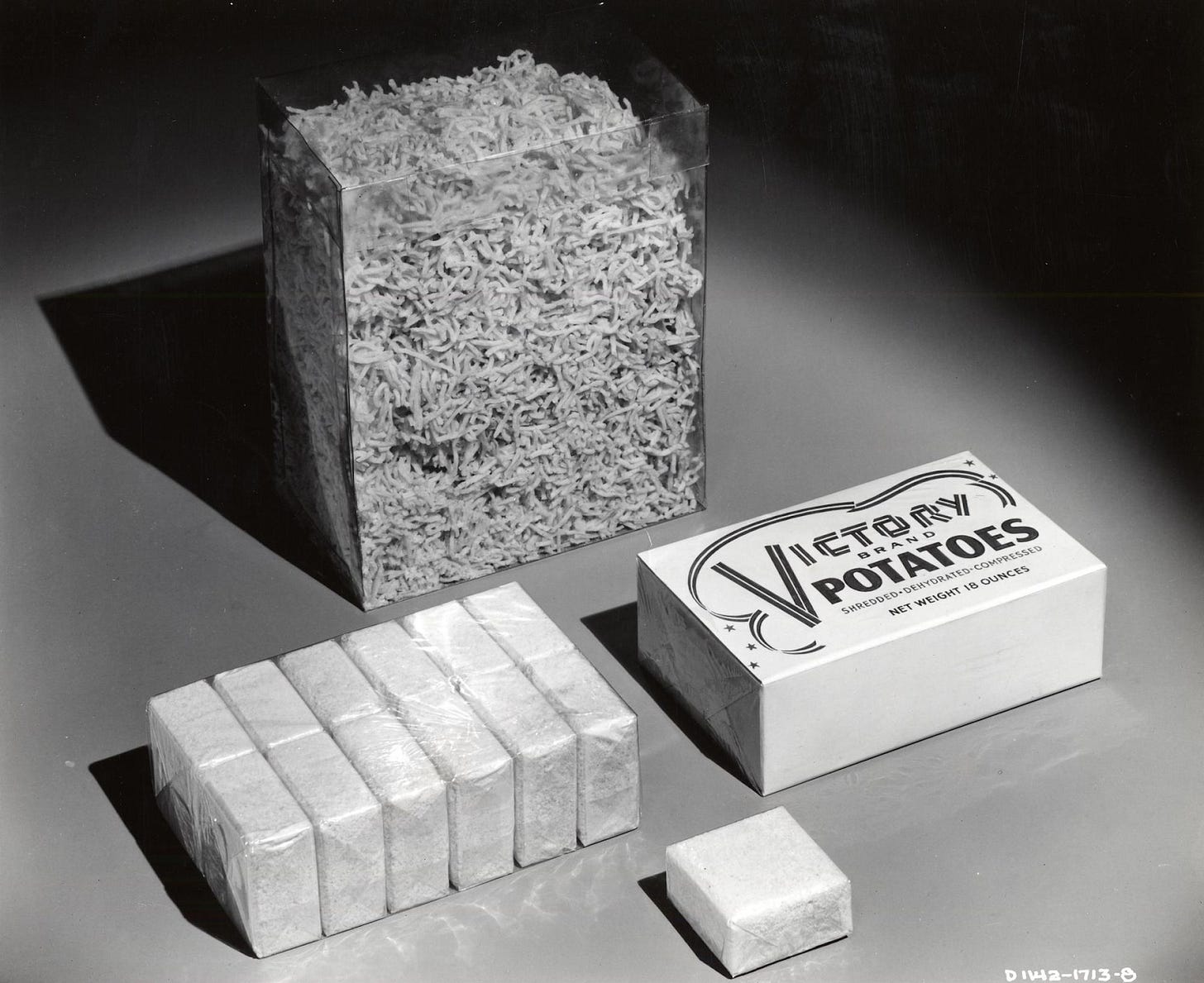

The chuño-chomping Incans were not the last military to rely on dehydrated potatoes for sustenance. In World War II, the US Army experimented with various forms of potato dehydration to help stretch supply lines. The easiest way to get a uniform potato commodity into the hands of G.I.s was to pulverize the potatoes into granules, dehydrate them, and then plan on bringing them back to life with boiling water in an imitation of “mashed potatoes”.

These shreds resemble the ancestral stock of modern Instant Mashed Potatoes (source)

The result was an affront. The potatoes were swimming in their own starch, released during the granule-making process, which when mixed with imprecise water ratios made for a slop that was somehow both gluey and soupy. Immediately after the war, French’s (now best known for mustard) tried to introduce “instant mashed potatoes” as a consumer product category. America’s veterans were not having it. They didn’t want to be reminded of the awful slurry they’d had on the front.

The commercial fortunes of instant mashed potatoes began to turn around a decade later, however, when food scientists in the US and Canada converged on methods for producing dehydrated potato flakes rather than granules. The flakes had substantial advantages. They didn’t get as glutinous when reconstituted. Their geometry made them easier to dry quickly, on the order of minutes or even seconds. Using a multi-step process called the “Philadelphia Cook”, they could lock in a more natural flavor. When prepared on the stove with butter and milk, they were supposed to turn out almost as good as the real thing without any onerous prep work on the part of the consumer.

This raises the question, though, of why food scientists kept working on improving instant mashed potatoes a decade after they were no longer required for the war effort. If you’re no longer constrained by having to stick it to the Axis, why not return to Glasse-style maſhed potatoes in all circumstances?

This is a pattern that recurs frequently in reading about American foodways of the 20th century: choices and innovations made under extreme duress in the World War II economy didn’t fade away when the duress subsided. Instead they echoed back into American life a few years later, despite the lean conditions that birthed them being replaced by extreme abundance.

Why did America start eating like it was on a total war footing again when my parents’ generation was young? There are a lot of overlapping explanations. Here are a few:

Industrial inertia: Companies that had spun up to supply a vast army didn’t want to shut down overnight, so they necessarily pivoted to the consumer market. Some of these efforts succeeded at entrenching new consumer categories (fish sticks, canned peaches) while others (hamburgers-in-a-can) did not.

Genuine innovation: Technologies brought to maturity during and after the war, notably frozen food, offer novel consumer benefits that stand on their own merits.

Tastes fixed by rationing: Consumer habits are sticky. People who spent a couple years forced to buy margarine instead of rationed butter, or skim milk instead of rationed meat, got used to those items and wanted to continue buying them in greater quantities than the prewar status quo.

Pursuit of efficiency: As women entered the workforce en masse in the postwar era, the pool of hours available to be spent on domestic labor like cooking shrank. As much as any dishwasher or washing machine, convenience foods are labor-saving, productivity-enhancing technologies for the home.

This last factor is the only one that can explain the continued development of instant mashed potato technology. There were no potato-flaking interests during the war to have inertia; the instant mashed potatoes are not superior to their fresh antecedents; there was no ingrained consumer preference for an instant mashed potato product. It is only the desire to reduce time spent on food prep that could create “better instant mashed potatoes” as a commercially viable R&D space in the 1950s.

The other factors contributed to the unique awfulness of my father’s instant mashed potatoes, though.

Another WWII technological innovation, the cavity magnetron used in radar installations, led directly to the invention of the home microwave oven, which began to proliferate widely in the 1970s. The microwave supercharged all “convenience food” trends, shortening not just prep time but cooking time as well. Uneven heating is hardly a concern when you can speed up your meals by a factor of ten.

Meanwhile, the existing postwar status of margarine and skim milk was greatly enhanced by the dietary fat scare of the 1980s and 1990s. These products displaced butter and whole milk as health-conscious consumers sought to eliminate saturated fats from their diets in a doomed effort to stave off the incipient obesity epidemic.

My parents, both already primed to accept these imitative products by my grandparents’ wartime preference formation, exclusively purchased margarine and skim milk for the household once they got married. And, pressed for time with two jobs and two kids, they frequently purchased instant mashed potatoes as well. And cooked them in the microwave.

What resulted was a second-order simulation of true maſhed potatoes, perverted and made unreal by the consumer echoes of the second world war. Real potatoes were substituted with desiccated flakes, real milk with a thin byproduct, real butter with refined vegetable oil, real mashing with the Philadelphia Cook, a real stovetop flame with microwave excitation. The measuring cup contained a substance gesturing at the notion of “mashed potatoes”, but no aspect of the original remained.

Yet because the name was the same, my father still believed he was eating the same dish my grandma made, the same dish his ancestors ate in Ireland, the same dish Glasse wrote about a quarter millennium ago. The appeal to him was undiminished. His body ate the slurry, but his mind still ate the maſhed potatoes of his youth.

My World is Built on Lies

In researching whether the ancient Andean peoples really did boil and mash potatoes, I came across this post which sheds light on the issues I have with my father’s instant mashed potatoes beyond their phenomenal unpleasantness when eaten.

There is a rhetorical sleight of hand happening in this Reddit post title.1 The phrasing implies that chuño resembles modern instant mashed potatoes in some way, that instant mashed potatoes are in some sense continuous with indigenous ways of potato-knowing. But there is no continuity of process, because the way chuño is created has no particular commonalities with the Philadelphia Cook beyond the removal of moisture. There is no continuity of form, for chuño actually looks like this:

(source)

And there is no continuity of purpose, either. To the Andean peoples, chuño was the only way of ensuring that their potato crops would be available well into the future. In America, our indigenous way of achieving this potato security is the entire miracle of modern agriculture and food distribution. I don’t need to stomp on freeze-dried potatoes in the Altiplano to make sure I’ll have access to potato nutrients next year. I just have to rely on the continued existence of Idaho and Target. No, despite what this redditor would like to believe, the instant mashed potato serves some other purpose.

That purpose is illuminated by the second rhetorical sleight of hand in the Reddit post, the one occurring on the box, in the form of the offset between the yellow lower-case “Instant” and the white majuscule “MASHED POTATOES”. “These are fundamentally maſhed potatoes,” this typography lies, “that happen to have been given the quality of ‘instant’”.

But they’re not. They’re a different thing entirely, a completely new evolutionary lineage of potato preparation that’s called “instant mashed potatoes” even though they’ve never been mashed. They are as distinct from Glasse’s maſhed potatoes as chuño is, but they masquerade as being the same, because that is their purpose - the fulfillment of a psychological need to consume something resembling the classic dish of “mashed potatoes” with slightly less effort than that dish requires.

This is a pedantic distinction - but it’s a distinction that had a big impact on my culinary life, because I believed the lie. My mental category of “mashed potatoes” was hijacked by this impostor and it made me think, for years and years, that I hated something that I actually would have liked all along. My preference formation was distorted by this warped, hyper-optimized fulfillment of my father’s crystallized preference. The expedient way to fulfill one generation’s desire locked the next generation out of experiencing that desire at all.

At this point in the review you might say, “what’s the big deal? It’s just mashed potatoes. Chill out.” Which, fair enough - if it were just mashed potatoes then 2500 words on them might be excessive. But the pattern I’ve described is far from unique to pureed tubers.

Consider an abstracted version of the saga of my father’s instant mashed potatoes. It has a few steps:

1) Humanity develops a Thing from ingredients that exist in the world.

2) Seeking efficiency at scale, an industry chops the ingredients of the Thing into teeny tiny bits.

3) Using an artificial emulsifier, the bits are bound back together into an aesthetically deficient but more convenient slurry that resembles the Thing.

4) Because it contains traces of the ingredients of the original Thing, this IMPish admixture is sold to us as if it were the original Thing.

Pared back to this level of abstraction, a surprising amount of stuff starts to seem like my father’s instant mashed potatoes.

The other foods in this category are obvious - McNuggets reconstituted out of pink slime, American cheese product, instant coffee, deli ham, Pringles minted from the very same potato flakes that go into IMPs. We’ve even developed a whole new health scare over them: “Ultra-processed foods”2 are as demonized now as butter and whole milk were when my parents were young.

Expand the pattern to the built environment. Pressboard, particle board, and other reconstituted material composites likely make up a majority of new furniture sold in the US. These are an IMPish imitation of actual wood furniture. Take care while assembling not to ding your brittle sheetrock walls, an IMPish upgrade over lath and plaster. Often these interiors live inside an apartment building clad in a mish-mash of random ornament, anti-massing regulations demanding an IMPish simulation of a varied city block.

Intellectual goods can be IMPish. Reader’s Digest, sports “best-of” VHSes, textbooks stuffed with decontextualized excerpts, YouTube compilations, ChiveTV, listicles, social media feeds consisting of screenshots of other social media, Now That’s What I Call Music!, an entire ecosystem of actual cultural objects broken down into bits and clumped back together.

Corporate structures can be IMPish. When I visit a medical office it’s usually a confusing tangle of overlapping practitioners and practices operating out of the same physical address, an IMPish imitation of the archetypal doctor with a shingle in town. Similar quagmires abound when dealing with insurance, or contractors, or financial services.

Once you see the instant mashed potato antipattern it’s hard to stop. The isomorphisms are everywhere.

The gig economy makes IMPish jobs. Swiping apps produce IMPish flirting. Meta-studies are IMPish science. TED Talks are IMPish symposia. Malls are IMPish shopping districts. Subdivisions are IMPish neighborhoods. Cruises are IMPish international travel, chopped into 14-hour chunks and emulsified with an ocean liner.

The internet scrapes together IMPish communities. We’re not atomized; we’re flaked. We’re Philadelphia Cooked, and we’re stewing here together in the microwave.

Large Language Models can gall on an aesthetic level because they are IMPish slurries of thought itself, every word ever written dried into weights and vectors and lubricated with the margarine of RLHF.3

Since World War II and the large-scale industrialization it fully unleashed, a core method driving ‘progress’ across many different fields of human endeavor has been to shred something real and reconstitute it into a faster, easier, less appealing IMPish substitute for what we used to make out of it. This is the parsimonious recipe for industry to fulfill our urges. We’ve got the food processor whirring, and absolutely everything is going in.

Why must the real be shredded to achieve these simulacra? Why can substitute products not be synthesized out of whole cloth? Because the integration of shreds of the real provides psychic camouflage, a credible way for the IMPish mimics to signal as their models.

The problem with this, of course, is the problem I had with my father’s instant mashed potatoes: the substitute is only able to satisfy a craving if you have the craving in the first place, and that requires direct experience with that which it is meant to replace. The memory of the thing being mimicked is a necessary ingredient for the IMPish imitation to work, the mental spell that allows the transmutation from IMPish Thing to Thing (original).

If you get to the party too late, if you never get to taste the maſhed potatoes, all you’re left with is a confusingly disappointing slurry going by the same name. When no distinction is drawn between the IMPish thing and its original, you don’t know what you’re missing. You don’t even know there’s anything to miss - after all, you’re still eating “mashed potatoes”!

If the IMPishness is pervasive enough, eventually you start to disbelieve that any of these reconstituted things could ever have been worthwhile, that any of the desires and preferences being fulfilled by these slurries ever could have been authentic. “Is this really what life is?”, you wonder, never having lived. “I don’t see why everyone was so jazzed about it.”

Cultivars of the Real

While formulating this review, I encountered a troubling congruence: the period during which my dad has been eating instant mashed potatoes consistently (roughly 1990-present) is about the same as the period between the onset of the Napoleonic Wars and the Irish potato famine in 1845. Why does one thirty-five-year pattern of potato consumption get to be considered authentic cultural heritage while another is self-deception? Aren’t they both equally contingent and ephemeral? Why should either be ‘real’?

This line of reasoning quickly starts to disclaim almost everything as fake. Maſhed potatoes could only arise from the technologies of the age of sail uniting New World tubers and Old World dairy. They’ve only been around for a few centuries. Why should they get to be considered ‘real’? For that matter, why is the potato itself considered real? It’s a confection whipped up by the Andean farmers of the last few millennia. The only things that are really real on the Altiplano are nightshade and hunger.

I find such primitivism unhelpful in making the sort of distinction I aim to make here. Taken to the extreme it suggests that no hominid has experienced reality since the taming of fire. Some might agree that that’s the case! But as far as I’m concerned, at least some of the fruits of civilization are real too. I do think there is a way to conceive of the real that admits potatoes, that even admits maſhed potatoes, but that gives legitimate reason to have grievance with IMPs.

On the Altiplano, the potato emerged through centuries of toil and discernment. Generation after generation of farmers chose only to propagate the solanum tubers that were bigger, tastier, less toxic, more nourishing. It is through such labor that every project of human civilization ultimately progresses - the ability, however imperfectly exercised, to act on the impulse “yes, more of this” when something is good, and “no, less of that” when it is bad.

As cultivators of the real, we get to choose not just among individual potatoes themselves, but among more abstract things like “memes concerning the preparation of potatoes”. Maſhed potatoes were good, and so their meme propagated and strengthened the foodways it came in contact with. It was planted widely in the garden of the real. The WWII potato granule meme was bad, so it was discarded, cast out upon the rocks of the fake.

IMPish substitutes subvert this process of cultivation. In masquerading as other cultivars of meme, they weaken our stock both by sneaking into the garden despite their insalubrity, and by causing us, as I did for so long with maſhed potatoes, to reject the healthy older cultivars which they mimic.

Perhaps some of them are worth adding to our garden on their own merits. Perhaps many of them are! Many of the things I take for granted as ‘real’ are as far removed from their natural origins as a Yukon Gold is from those tiny nightshade roots, and in many cases I’m glad that we decided to keep them. But we must be clear-eyed about what each specimen is and what it is not in order to have any hope of making our decisions correctly.

Nowadays, I do not judge people for making use of instant mashed potatoes. I certainly take plenty of other prepared food culinary shortcuts myself. In the modern world we all make compromises for the sake of convenience. If we didn’t, we’d still be stomping on chuño to survive the winter.

But I do think it’s important to mind the distinction whenever you notice the IMPish pattern. There is a trick being played on you. You are not eating or watching or doing the Thing that your ancestors did, even if it contains the same ingredient and hides behind the same name. You’re planting something new in the garden of the real, and the nourishment it provides for your spirit, or the spirit of your children, may not be the same.

Fortunately, it is rare for even the most aggressive IMPish mimic to drive its model to extinction. It took over a decade, but I was eventually able to see past the deception of my father’s instant mashed potatoes and seek out the real version. Now I make maſhed potatoes regularly. My garden has one more good thing in it, and one less bad.

I even, on a recent visit to my grandmother’s house where I cooked St. Patrick’s Day dinner, got my dad to make real mashed potatoes himself, in a saucepan over a gas flame. It was the first time he’d ever done so. He enjoyed them.

***

In the interest of full fairness while writing this review, I purchased a plastic cup of my dad’s currently favored “Buttery Homestyle” Idahoan brand instant mashed potatoes for $1.99. The preparation was extraordinarily efficient; the aroma was decent; the taste was a reasonable facsimile; but the texture was all wrong - a smothering paste that coated my mouth and constrained my tongue like a straitjacket. 3/10 would not buy again.

Sources of potato facts (verified with primary sources linked within whenever possible):

https://tedium.co/2017/11/21/mashed-potato-history/

https://www.mentalfloss.com/article/627023/mashed-potatoes-history

https://spudsmart.com/spud-history-instant-mashed-potatoes/

1. Ignoring the error that Ainu potato treatments like munini-imo are not ‘ancient’ at all, deriving from the long tail of the Columbian exchange in the 16th through 19th centuries like every other Old World potato dish. Comparisons between Instant Mashed Potatoes and munini-imo are precisely as inapt as with chuño, for the same reasons.

2. “Processed” is a slippery term that evokes all kinds of chemical perversions, but the physical transformation of chopping into tiny bits is fundamental to the notion. Consider what a “food processor” does.

3. Claude, by the way, estimates that 30-40% of all mashed potatoes eaten in the US are the instant kind. ChatGPT says 25-35%.
