Hovertext:

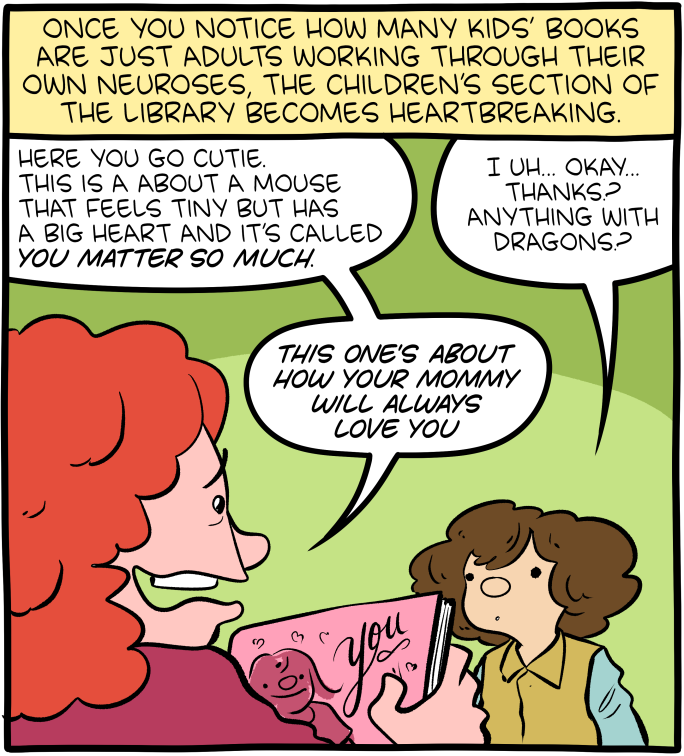

Writing a book to convince a child they're special is like writing a book to convince a fish it can swim.

Hovertext:

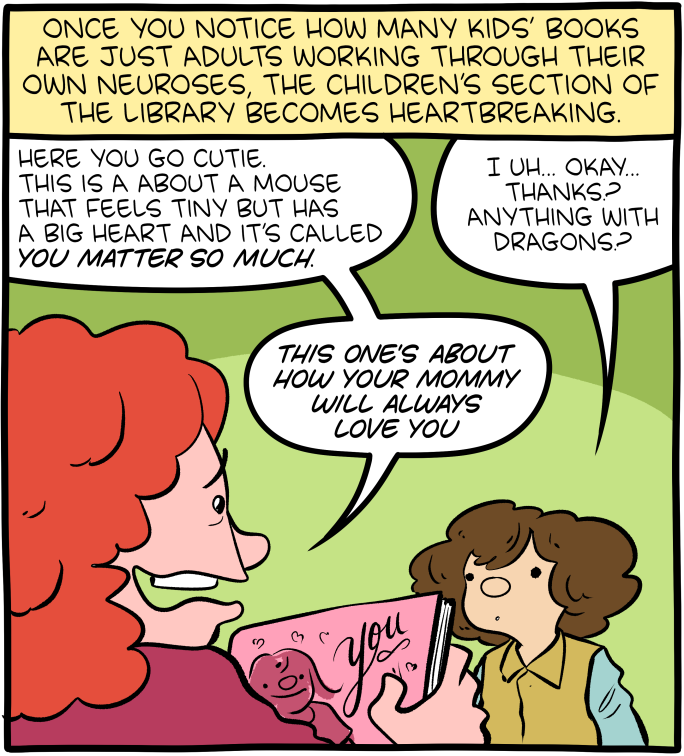

Absolute Midden is copyright SMBC Enterprises 2024 all rights reserved.

(credit: OsakaWayne Studios)

As if we didn’t have enough reasons to get at least eight hours of sleep, there is now one more. Neurons stay active during sleep. We may not realize it, but the brain uses this recharging period to clear out junk that accumulated during waking hours.

Sleep is something like a soft reboot. We knew that slow brainwaves had something to do with restful sleep; researchers at the Washington University School of Medicine in St. Louis have now found out why. When we are awake, our neurons need energy to fuel complex tasks such as problem-solving and committing things to memory. The problem is that consuming the nutrients that supply this energy leaves debris behind. As we sleep, neurons use these rhythmic slow waves to help push cerebrospinal fluid through brain tissue, flushing out metabolic waste in the process.

In other words, neurons need to take out the trash so it doesn’t accumulate and potentially contribute to neurodegenerative diseases. “Neurons serve as master organizers for brain clearance,” the WUSTL research team said in a study recently published in Nature.

Hovertext:

This is actually pretty close to a complaint lodged at Egil Skallagrímsson by a princess.

Hovertext:

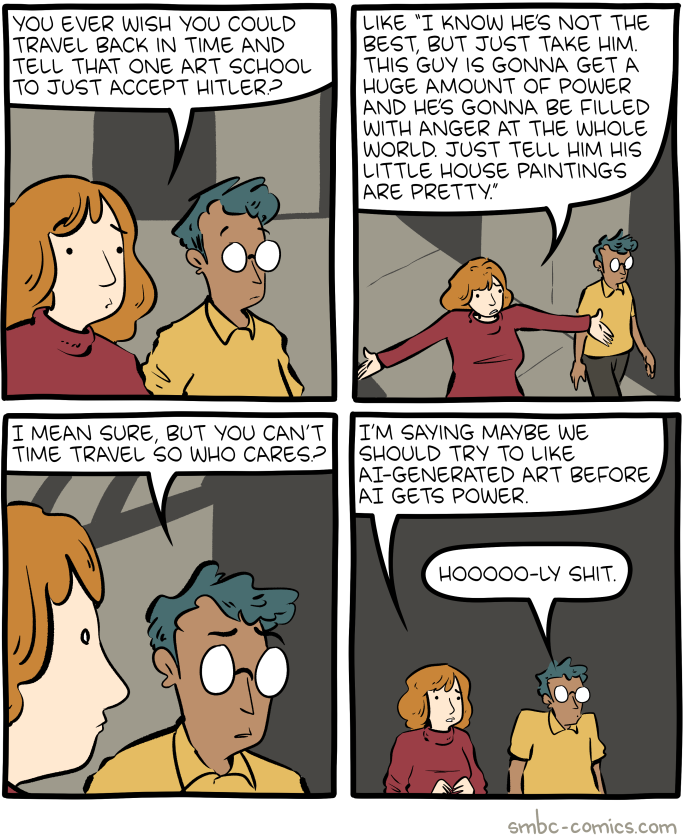

GREAT JOB AI, STAY WITH ART YOU'RE NAILING IT